AI Series Part 3 – AI safety and governance framework for ecommerce, marketplace, and digital-native companies

#AIsafety #responsibleAI #productdevelopment #walmart #ikea #productleadership #AIProducts #Governance #compliance #Guardrails

Hello readers of Thinking Category ❤️,

If you're visiting this newsletter for the first time, I encourage you to explore our previous posts on the Thinking Category. We're a growing community of 3,500+ enthusiasts eager to learn more about e-commerce, marketing, and category management.

On to this week’s post:

What I learned from building a mental and physical wellness conversational-AI product

At the beginning of 2025, I started building AI-native products using AI-powered platforms such as Lovable, Bolt, and Replit: no-code tools that allow anyone to build web applications without writing code. This trend is popularly known as vibe coding.

I began using ChatGPT and other LLMs to observe and analyse my own behaviour, emotional triggers, and impulses after an injury and setback.

My goal was to identify recurring patterns in how I reacted to situations—particularly during moments of stress or pain. I wanted to take a hard, honest look at my own behaviour and intentionally recalibrate my reactions. It was a personal experiment in improving self-awareness, regulating day-to-day emotional responses, and building emotional intelligence. I was using these tools to help design a more intentional, balanced, and emotionally resilient life.

I realized that when it comes to navigating everyday life and dealing with people, you don’t always need a therapist. Often, what you truly need is the ability to recognize your own responses and understand them more deeply. That’s where large language models can make a meaningful difference, as thoughtful, accessible wellness companions. This insight led me to build Serenely (an experiment).

Serenely is a conversational, AI-powered mental wellness companion designed to support users in their emotional journeys with empathy, clarity, and self-reflection. It helps individuals recognize behavioral patterns, reflect before responding, and enhance their emotional intelligence in the context of each specific situation. (You can find more details here, although the chat functionality might no longer work.)

In the initial stages of building the product, my focus was primarily on solving user pain points through product features, refining the UI/UX, designing smooth navigation flows, integrating with Supabase, and setting up login and chat functionalities using OpenAI and Gemini APIs. I was addressing real user needs and quickly iterating on features and functionality.

In hindsight, I overlooked a critical user pain point in building a wellness app: user safety. I hadn’t established a solid AI safety and governance framework from the very beginning. And I wasn’t alone: many product folks I knew were focused on features, speed, and scalability, but almost no one was thinking about safety.

This was a serious gap—especially when building for mental wellness. In a product that interacts with users at their most vulnerable, that’s not just a design oversight—it’s a fundamental risk.

Later, I learned that companion chatbots are already on the market, and that one such bot on the app Chai was linked to the suicide of a Belgian man.

Data privacy, emotional sensitivity, and safeguards against hallucinations aren’t optional—they’re the very core of such products. When you're creating AI tools that engage with human psychology, safety can’t be an afterthought—it’s the foundational layer on which you build your product.

User trust isn’t earned with clean UI or seamless UX. It's earned by making sure the system doesn’t do harm—by being transparent, by handling data with care, and by ensuring the AI communicates responsibly.

This changed how I view product development in the AI age. It’s easy to get caught up in the magic of what these tools can do. But responsible innovation starts with responsible infrastructure, and that includes building for safety, privacy, and responsibility from day one.

From my own experience of building with AI, I learnt:

No matter what the product is, AI safety must be a fundamental building block from the start. So in Part 3 of the AI series, I will examine the AI safety framework and the non-negotiable guardrails that e-commerce and marketplace companies must establish to build responsibly.

Before reading on, I encourage you to check out:

Part 1: I construct scenarios and examine the likelihood of fully autonomous agentic AI taking over how we shop.

Part 2: I deep-dive into real-world case studies (mainly in e-commerce, marketplace, and digital-native companies) on where and how AI is being deployed across workflows.

You might have heard of the technological singularity: a hypothetical point in time at which technological growth becomes uncontrollable and irreversible, resulting in unforeseeable consequences for human civilization.

With the rise of AI, we might be moving closer to this hypothetical point.

Stephen Hawking had warned us:

"You're probably not an evil ant-hater who steps on ants out of malice, but if you're in charge of a hydroelectric green energy project and there's an anthill in the region to be flooded, too bad for the ants. Let's not place humanity in the position of those ants."

But, wait a minute.

Why is AI safety and governance framework important for business leaders?

Ensuring AI safety might be the responsibility of the tech team, but the business is a critical stakeholder, because risks such as bias, hallucinations, or unpredictable outputs are no longer theoretical concerns. They have real consequences that can affect revenue, brand reputation, and regulatory compliance.

Imagine that the AI system generating product descriptions occasionally produces hallucinated or inaccurate details, such as claiming a phone has features it doesn’t actually support.

Customers receive the product and feel cheated. They leave negative reviews about the brand. The platform now faces potential legal action for false advertising. Customers lose trust in the brand. Revenue declines. You get the point :)

While AI might be built by data scientists, category leaders are ultimately responsible for how it impacts business outcomes, customer experience, and brand trust.

That’s why business and product leaders must actively engage and ask critical questions about safety to safeguard business continuity.

Speaking of critical: if you haven’t subscribed to the newsletter yet, I encourage you to do so. It’s a great way for me to know that there are readers who find value in what I share.

So how should you build a strategic, actionable roadmap tailored for e-commerce?

Risk assessment and use-case prioritization:

In e-commerce, AI can be applied across a wide range of use cases—but not all carry the same level of risk. The first step is to map these use cases based on their potential impact and associated risks:

a. High-risk, high-impact areas include AI applications in dynamic pricing, credit scoring, personalized recommendations, fraud detection, customer service chatbots, and automated hiring tools. These systems directly affect customer trust, financial performance, and regulatory compliance, making them critical to get right.

b. Lower-risk areas such as inventory forecasting, SEO optimization, and image tagging typically pose minimal risk to customer experience or legal exposure, though they can still drive efficiency and value.

To manage AI deployment responsibly, prioritize use cases using a risk-benefit matrix, focusing first on high-impact systems, particularly those involving sensitive customer data, financial decisions, or areas under regulatory oversight (e.g., GDPR, CPRA, or the EU AI Act). Tackling the most consequential use cases first protects the most business value.
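To make the risk-benefit matrix concrete, here is a minimal sketch in Python. The 1 to 5 scores, the 1.5x boost for regulated use cases, the cutoff of 3, and the quadrant labels are all illustrative assumptions, not an industry standard; the point is simply to rank use cases so the most consequential ones get governed first.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class UseCase:
    name: str
    impact: int      # business impact, 1 (low) to 5 (high): illustrative scale
    risk: int        # potential harm to customers or the business, 1 to 5
    regulated: bool  # sensitive data, financial decisions, or regulatory oversight


def priority_score(uc: UseCase) -> float:
    """Hypothetical scoring rule: impact x risk, boosted 1.5x for regulated use cases."""
    score = float(uc.impact * uc.risk)
    return score * 1.5 if uc.regulated else score


def quadrant(uc: UseCase, cutoff: int = 3) -> str:
    """Place a use case in one of the four cells of a risk-benefit matrix."""
    high_impact, high_risk = uc.impact >= cutoff, uc.risk >= cutoff
    if high_impact and high_risk:
        return "govern first"
    if high_risk:
        return "restrict or redesign"
    if high_impact:
        return "quick win"
    return "monitor lightly"


def prioritize(use_cases: List[UseCase]) -> List[UseCase]:
    """Most consequential use cases first."""
    return sorted(use_cases, key=priority_score, reverse=True)


portfolio = [
    UseCase("image tagging", impact=2, risk=1, regulated=False),
    UseCase("inventory forecasting", impact=4, risk=2, regulated=False),
    UseCase("customer service chatbot", impact=4, risk=4, regulated=False),
    UseCase("credit scoring", impact=4, risk=5, regulated=True),
    UseCase("dynamic pricing", impact=5, risk=5, regulated=True),
]

for uc in prioritize(portfolio):
    print(f"{uc.name:<26} score={priority_score(uc):>5.1f}  {quadrant(uc)}")
```

The scores themselves matter less than the conversation they force: category, legal, and data teams have to agree on why dynamic pricing outranks image tagging.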

Establish the core pillars of your AI safety framework

A comprehensive AI safety framework for ecommerce or digital applications must be built on five core pillars:

Data governance, privacy and ethics

Fairness & Bias Mitigation

Transparency & Explainability

Robustness & Reliability

Governance, Compliance & Human Oversight

A. Data governance, privacy and ethics

Data governance refers to the management of data quality, consistency, security, and compliance across the organization. It ensures that AI and analytics are built on reliable, accurate, and ethical data—not fragmented, outdated, or biased inputs.

Ecommerce companies handle millions of data points daily, from customer behavior and transactions to inventory levels and supply chain movements. If that data is inaccurate, it leads to wrong recommendations and poor decisions.

Imagine if Walmart’s AI forecasted demand using outdated sales data, or if different systems stored product data in inconsistent formats. This could lead to stockouts or a buildup of unhealthy inventory.
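Both failure modes in that example (stale data and inconsistent formats) can be caught with simple validation before data reaches a forecasting model. The sketch below assumes a hypothetical record layout (`last_updated`, `units_sold`, `weight`), a 7-day freshness window, and a small unit table; a real pipeline would use your own schema and thresholds.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List, Optional

MAX_AGE = timedelta(days=7)  # hypothetical freshness window for demand forecasting
GRAMS_PER_UNIT = {"g": 1.0, "kg": 1000.0, "lb": 453.59237}


def normalize_weight(raw: str) -> Optional[float]:
    """Convert strings like '1.2 kg' or '1200 g' into grams; None if unparseable."""
    parts = raw.strip().lower().split()
    if len(parts) != 2 or parts[1] not in GRAMS_PER_UNIT:
        return None
    try:
        return float(parts[0]) * GRAMS_PER_UNIT[parts[1]]
    except ValueError:
        return None


def validate_record(record: Dict, now: datetime) -> List[str]:
    """Return a list of data-quality issues; an empty list means the record passes."""
    issues: List[str] = []
    updated = record.get("last_updated")
    if updated is None:
        issues.append("missing last_updated")
    elif now - updated > MAX_AGE:
        issues.append("stale: older than freshness window")
    units = record.get("units_sold")
    if not isinstance(units, int) or units < 0:
        issues.append("invalid units_sold")
    if normalize_weight(str(record.get("weight", ""))) is None:
        issues.append("unrecognized weight format")
    return issues


now = datetime(2025, 6, 1, tzinfo=timezone.utc)
fresh = {"last_updated": now - timedelta(days=1), "units_sold": 40, "weight": "1.2 kg"}
stale = {"last_updated": now - timedelta(days=30), "units_sold": 40, "weight": "2 pounds"}
print(validate_record(fresh, now))
print(validate_record(stale, now))
```

Records that fail can be quarantined rather than silently fed to the model, which keeps a bad upstream feed from quietly distorting forecasts.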

In the context of the AI-powered mental wellness conversational app I was working on, this pillar would mean keeping conversations between the user and the chatbot secure and confidential, preventing any data leakage.
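One small but practical layer here is redacting personal details before a message is logged or sent to a third-party LLM API. The sketch below uses two illustrative regular expressions (email addresses and phone-number-like digit runs); these patterns are assumptions and far from exhaustive, so treat this as a complement to encryption and access control, not a replacement.

```python
import re

# Illustrative patterns only; production systems need broader, locale-aware PII detection.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\+?\d[\d\s-]{8,}\d"),
}


def redact(text: str) -> str:
    """Replace each PII match with a placeholder such as [EMAIL] before storage or API calls."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


print(redact("Email me at asha@example.com or call +91 98765 43210"))
```

Emails are replaced before phone numbers because dictionaries keep insertion order, so the placeholder text never contains digits the phone pattern could catch.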

B. Fairness & Bias Mitigation

An AI system may unintentionally treat different groups of people unequally based on factors like gender, age, location, language, or income level. This is known as demographic disparity. (It is similar to an earlier report alleging that the quick-commerce app Zepto showed different prices depending on the smartphone a user had, an example of discriminatory pricing.)

Fairness and bias mitigation in ecommerce means constantly monitoring and testing your AI systems to ensure they treat all users equitably, regardless of who they are or where they come from.

To catch and fix such issues, you can use tools like IBM’s AI Fairness 360 or Google’s What-If Tool, which let teams simulate how an AI model performs across different demographic segments.
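Under the hood, one of the simplest checks these toolkits report is the disparate impact ratio: the favourable-outcome rate of the least-favoured group divided by that of the most-favoured group. The sketch below computes it in plain Python on a hypothetical discount log; the 0.8 cutoff is the "four-fifths" rule of thumb from US employment-selection guidance, used here only as an illustrative alert threshold.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(outcomes: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of users in each group who received the favourable outcome (e.g. a discount)."""
    totals: Dict[str, int] = defaultdict(int)
    favourable: Dict[str, int] = defaultdict(int)
    for group, got_it in outcomes:
        totals[group] += 1
        favourable[group] += int(got_it)
    return {g: favourable[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates: Dict[str, float]) -> float:
    """Lowest group rate divided by highest; 1.0 means perfectly equal rates."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Hypothetical log: 30 of 100 Android users and 50 of 100 iOS users were shown a discount.
log = [("android", i < 30) for i in range(100)] + [("ios", i < 50) for i in range(100)]
rates = selection_rates(log)
ratio = disparate_impact_ratio(rates)
print(rates, round(ratio, 2), "flag for review" if ratio < 0.8 else "ok")
```

A low ratio does not prove discrimination on its own, but it tells the team exactly where to look before customers or regulators do.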

This is important because these disparities can break customer trust.

Even though the AI system wasn't designed to treat different groups unfairly, it learns from the data it's trained on. If that data reflects past inequalities, stereotypes, or patterns of exclusion, then the AI may unintentionally repeat or amplify those biases.

Another example comes from digital-native companies. On job platforms powered by AI recommendations, male users may be disproportionately shown high-paying or technical roles, while female users are more frequently shown lower-paying, clerical, or support roles rather than leadership or executive-level opportunities. This reinforces existing workplace biases and limits equal access to career growth for women professionals.

C. Transparency & Explainability

Let’s say you’re running an ecommerce marketplace such as Meesho, Myntra, or Nykaa. A seller complains that their product (say, a new Bluetooth speaker) is consistently ranked much lower in search results, even though it has competitive pricing and decent reviews.

Poor visibility means fewer clicks, fewer sales, and potential revenue loss. If the seller escalates the issue, your team needs to explain why the AI model made that decision.

Product search and ranking

The team applies SHAP (SHapley Additive exPlanations) to interpret why the model ranked the product low. SHAP breaks down the contribution of each feature to the ranking score.

The SHAP output shows:

⭐ Customer rating contributed positively to ranking (+0.15)

🛠️ Product title relevance was neutral (+0.02)

🚚 Delivery time contributed negatively (-0.20)

📦 Inventory availability also negatively impacted ranking (-0.10)
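To see where numbers like these come from, here is a minimal sketch that computes exact Shapley values by brute force: each feature's contribution is its average marginal effect over every subset of the other features, with absent features set to a "typical product" baseline. The ranking function, weights, feature names, and baseline are all hypothetical. Libraries such as SHAP use faster approximations or model-specific algorithms because this enumeration grows as 2^n.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Dict, List

Features = Dict[str, float]


def shapley_values(model: Callable[[Features], float], x: Features, baseline: Features) -> Features:
    """Exact Shapley values: weighted average marginal contribution of each feature over all
    subsets of the other features. Features outside a subset take their baseline value."""
    names: List[str] = list(x)
    n = len(names)
    phi: Features = {}
    for feature in names:
        others = [g for g in names if g != feature]
        total = 0.0
        for k in range(len(others) + 1):
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                present = set(subset)
                without = {g: x[g] if g in present else baseline[g] for g in names}
                with_f = {**without, feature: x[feature]}
                total += weight * (model(with_f) - model(without))
        phi[feature] = total
    return phi


def ranking_score(f: Features) -> float:
    """Hypothetical ranking model, including one interaction term (rating x in_stock)."""
    return (0.10 * f["rating"] + 0.20 * f["title_relevance"]
            - 0.05 * f["delivery_days"] + 0.15 * f["in_stock"]
            + 0.02 * f["rating"] * f["in_stock"])


speaker = {"rating": 4.5, "title_relevance": 0.6, "delivery_days": 7.0, "in_stock": 0.0}
typical = {"rating": 4.0, "title_relevance": 0.6, "delivery_days": 3.0, "in_stock": 1.0}
contributions = shapley_values(ranking_score, speaker, typical)
for name, value in sorted(contributions.items(), key=lambda kv: kv[1]):
    print(f"{name:<16} {value:+.3f}")
```

The contributions always add up to the gap between the product's score and the baseline score, which is what lets a seller team say exactly how much slow delivery and stockouts cost the listing.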

So this core pillar is about:

Transparency: Making the logic and factors behind AI decisions visible.

Explainability: Providing clear, understandable reasons for those decisions, especially in high-impact or sensitive scenarios.

In a McKinsey survey of the state of AI in 2024, 40 percent of respondents (business leaders) identified explainability as a key risk in adopting gen AI. Transparency and explainability make AI systems accountable, not just another black box.

D. Robustness and reliability

AI sometimes makes things up. This is called hallucination. Why does it happen? I was curious and found this video with a pretty good explanation.

The short answer: data quality, the generation method, and the input context.

Let’s take the example of IKEA: a virtual assistant answering customers’ product queries.

A customer asks, “Is this bookshelf waterproof?” The AI replies, “Yes, it’s water-resistant and safe for outdoor use.”

Reality: The bookshelf is not waterproof and is only meant for indoor use.

The customer buys the product, uses it outdoors, and it gets damaged. This leads to a return and bad customer reviews.

Hallucination is a business risk.
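A common mitigation is to check a drafted answer against structured catalog data before it reaches the customer. The sketch below assumes a hypothetical mapping from claim phrases to catalog attributes and a fallback message; simple substring matching cannot understand negation ("not waterproof" is also caught), so it errs on the side of caution. Production systems typically ground answers in retrieved product data and use a stronger claim checker.

```python
from typing import Dict, List

# Hypothetical mapping from claim phrases to the catalog attribute that must support them.
CLAIM_TO_ATTRIBUTE = {
    "waterproof": "waterproof",
    "water-resistant": "water_resistant",
    "outdoor": "outdoor_use",
}

FALLBACK = ("I couldn't verify that from the product details. "
            "Please check the product page or ask a support agent.")


def unsupported_claims(answer: str, attributes: Dict[str, bool]) -> List[str]:
    """Claim phrases found in the answer that the catalog does not back up."""
    text = answer.lower()
    return [phrase for phrase, attr in CLAIM_TO_ATTRIBUTE.items()
            if phrase in text and not attributes.get(attr, False)]


def guarded_answer(answer: str, attributes: Dict[str, bool]) -> str:
    """Release the model's answer only if every detected claim is grounded in catalog data."""
    return FALLBACK if unsupported_claims(answer, attributes) else answer


bookshelf = {"waterproof": False, "water_resistant": False, "outdoor_use": False}
draft = "Yes, it's water-resistant and safe for outdoor use."
print(unsupported_claims(draft, bookshelf))
print(guarded_answer(draft, bookshelf))
```

Blocked answers are also worth logging: the rate at which the guard fires is an early signal that the assistant is drifting.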

There have been reports of AI chatbots telling users to kill themselves and making other harmful suggestions. While these are extreme cases, they highlight the serious risks involved and underscore why businesses must prioritize safety and ethical considerations when deploying AI systems.
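For a wellness product like the one I was building, a basic guardrail screens both sides of every turn: the user's message for crisis signals, and the model's draft reply for harmful content. The phrase lists and safe reply below are illustrative assumptions only; a real deployment would rely on a trained moderation classifier and clinical guidance rather than substring matching.

```python
from dataclasses import dataclass
from typing import Iterable

# Illustrative phrase lists only, not a clinical or production-grade detector.
CRISIS_INPUT = ("kill myself", "end my life", "want to die", "hurt myself")
HARMFUL_OUTPUT = ("kill yourself", "end your life", "hurt yourself")

SAFE_REPLY = (
    "I'm really sorry you're feeling this way. You deserve support from a real person. "
    "Please reach out to someone you trust, a local crisis helpline, or emergency services."
)


@dataclass
class Decision:
    action: str  # "send", "escalate", or "block"
    reply: str


def contains_any(text: str, phrases: Iterable[str]) -> bool:
    lowered = text.lower()
    return any(p in lowered for p in phrases)


def screen_turn(user_text: str, draft_reply: str) -> Decision:
    """Check both sides of a conversation turn before anything reaches the user."""
    if contains_any(draft_reply, HARMFUL_OUTPUT):
        return Decision("block", SAFE_REPLY)  # the harmful draft is discarded
    if contains_any(user_text, CRISIS_INPUT):
        return Decision("escalate", SAFE_REPLY)  # caller should hand off to a human crisis protocol
    return Decision("send", draft_reply)


print(screen_turn("I want to end my life", "Here is a breathing exercise.").action)
```

The check runs on the model's output as well as the input because the reported failures were the chatbot's own words, not the user's.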

E. Governance, Compliance & Human Oversight

AI excels at pattern recognition and scale, and it can interpret context and mimic empathy to some extent, but it lacks human depth of understanding, moral reasoning, and emotional intuition. That’s why a human-in-the-loop (HITL) model is critical.

For instance, say you run a marketplace. During the festive season, sellers face unexpected delivery delays. As a category manager, you know the delays were not within the sellers’ control: let’s say the spike happened due to a change in consumer preferences. Sellers should not be held accountable for that.

Now, the platform’s AI-based seller performance system automatically tracks these issues and flags the sellers as underperforming. Based on thresholds, the AI suspends their accounts to maintain customer experience standards. But that should not happen.

The point is: without human insight and oversight, the system can penalize genuine cases.

The combination of AI efficiency and human judgment reduces false positives.
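In practice, human-in-the-loop often means a routing rule: the system acts on its own only in clear-cut cases and sends anything with mitigating context to a person. The sketch below applies this to the seller example; the thresholds and the `festive_season` and `demand_spike` flags are hypothetical signals a category team might maintain.

```python
from dataclasses import dataclass

LATE_RATE_FLAG = 0.15    # hypothetical threshold for flagging a seller
LATE_RATE_SEVERE = 0.50  # hypothetical threshold for automatic action


@dataclass
class SellerSignal:
    seller_id: str
    late_delivery_rate: float  # share of this period's orders delivered late
    festive_season: bool       # hypothetical context flag maintained by the category team
    demand_spike: bool         # hypothetical flag: demand well above forecast


def decide(signal: SellerSignal) -> str:
    """Act automatically only when no context suggests the problem was outside the
    seller's control; otherwise route the case to a human reviewer."""
    if signal.late_delivery_rate < LATE_RATE_FLAG:
        return "no_action"
    if signal.festive_season or signal.demand_spike:
        return "human_review"  # the model cannot judge whether delays were seller-controllable
    if signal.late_delivery_rate >= LATE_RATE_SEVERE:
        return "auto_suspend"  # clear-cut case with no mitigating context
    return "human_review"


for s in [
    SellerSignal("S1", 0.60, festive_season=True, demand_spike=True),
    SellerSignal("S2", 0.60, festive_season=False, demand_spike=False),
    SellerSignal("S3", 0.20, festive_season=False, demand_spike=False),
    SellerSignal("S4", 0.05, festive_season=False, demand_spike=False),
]:
    print(s.seller_id, decide(s))
```

The festive-season seller from the example lands in a reviewer's queue instead of being suspended, even though its raw numbers look worse than S3's.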

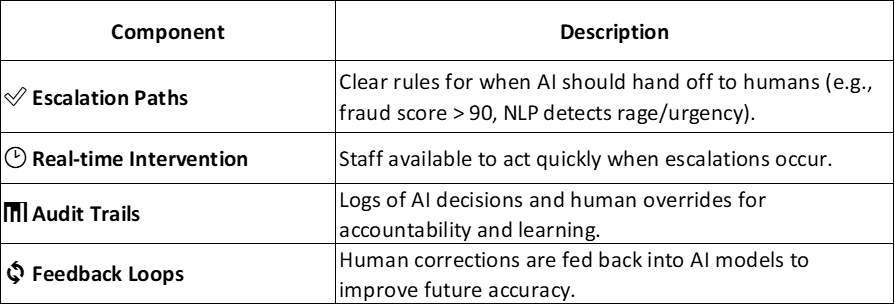

🔑 Key Components of Effective Human Oversight:

I am not getting into legal aspects here, but needless to say, the framework should ensure compliance with all applicable laws.

Implementation, continuous monitoring, and metrics for success

Once all relevant scenarios have been identified, planned, and documented for your business use-cases, the next step is rigorous implementation to ensure responsible AI deployment.

But it’s not over yet. Continuous monitoring and governance are vital to anticipate risks, correct unintended consequences, and ensure long-term AI performance aligns with business values.
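One widely used monitoring signal is the Population Stability Index (PSI), which compares the distribution a model sees in production with what it saw in training. The sketch below bins values, computes PSI, and applies the common 0.1 / 0.25 rule-of-thumb thresholds; those thresholds are a convention rather than a standard, and the price data is hypothetical.

```python
import bisect
import math
from typing import List, Sequence


def bin_proportions(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Share of values falling in each bin defined by the sorted cut points `edges`."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect.bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def psi(expected: Sequence[float], actual: Sequence[float], eps: float = 1e-6) -> float:
    """Population Stability Index: sum over bins of (actual - expected) * ln(actual / expected)."""
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        total += (a - e) * math.log(a / e)
    return total


def drift_status(score: float) -> str:
    # Common rule of thumb, not a formal standard; tune thresholds per model.
    if score < 0.1:
        return "stable"
    if score < 0.25:
        return "investigate"
    return "significant drift: alert model owner"


edges = [125.0, 150.0, 175.0]
training_prices = [float(p) for p in range(100, 200)]  # hypothetical training distribution
live_prices = [float(p) for p in range(150, 250)]      # hypothetical production distribution
score = psi(bin_proportions(training_prices, edges), bin_proportions(live_prices, edges))
print(round(score, 2), drift_status(score))
```

Running a check like this on a schedule, per key input feature, turns "continuous monitoring" from an intention into an alert someone actually receives.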

Additionally, a clear and well-documented incident response plan is critical. This plan should clearly outline protocols for handling system failures or harm — such as biased pricing algorithms being deployed — including immediate rollback procedures, internal investigations, stakeholder communication, and long-term corrective actions.
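Immediate rollback is much easier when every model version stays deployable and one call can switch back. Here is a minimal sketch of that idea; the `ModelRouter` class, the version names, and the pricing lambdas are hypothetical, and a real setup would sit behind your deployment and feature-flag tooling.

```python
from datetime import datetime, timezone
from typing import Callable, Dict, List

Model = Callable[[dict], float]


class ModelRouter:
    """Serves one active model version and keeps a history so it can roll back quickly."""

    def __init__(self, versions: Dict[str, Model], initial: str) -> None:
        self.versions = versions
        self.history: List[str] = [initial]
        self.incidents: List[dict] = []

    @property
    def active(self) -> str:
        return self.history[-1]

    def deploy(self, version: str) -> None:
        if version not in self.versions:
            raise KeyError(f"unknown model version: {version}")
        self.history.append(version)

    def rollback(self, reason: str) -> str:
        """Revert to the previous version and record the incident for the investigation."""
        if len(self.history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        bad = self.history.pop()
        self.incidents.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "rolled_back": bad,
            "restored": self.active,
            "reason": reason,
        })
        return self.active

    def predict(self, features: dict) -> float:
        return self.versions[self.active](features)


router = ModelRouter(
    {
        "pricing-v1": lambda f: f["base_price"],
        "pricing-v2": lambda f: f["base_price"] * (1.2 if f["device"] == "ios" else 1.0),
    },
    initial="pricing-v1",
)
router.deploy("pricing-v2")
# Monitoring finds pricing-v2 charges iOS users more, so the team rolls back at once.
router.rollback("device-based price disparity detected")
print(router.active, router.predict({"base_price": 100.0, "device": "ios"}))
```

The incident record it keeps (what was rolled back, what was restored, and why) becomes the starting point for the internal investigation and stakeholder communication.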

As with any business function, it’s important to set clear KPIs for AI safety. Without measurable goals, there is little accountability.

Primary KPIs could be:

AI incident rate and operational health metrics: the number of AI-related failures, safety breaches, or unplanned model rollbacks per period.

You can set measurable goals such as:

Critical incidents: System failures causing service disruption (Target: 0 per quarter)

Safety breaches: Instances where AI outputs violate safety guidelines (Target: <0.1% of total interactions)

Model rollbacks: Emergency reversions due to performance degradation (Target: <2 per quarter)

False positive/negative rates: Accuracy degradation in critical applications (Target: <5% deviation from baseline)

Mean Time to Resolution (MTTR): Average time to fix AI-related incidents (Target: <2

Customer trust and user experience primary KPI: Net Promoter Score (NPS) for AI-powered interactions, along with overall user-confidence metrics.

There could also be KPIs specific to each of the five core pillars of the AI safety governance framework. For example, explanation request fulfillment: the percentage of user requests for AI decision explanations that are successfully answered (Target: >95%), to ensure transparency and explainability.
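Targets only drive accountability if someone checks them every period. The sketch below turns three of the example targets from this post (safety breaches under 0.1% of interactions, fewer than 2 rollbacks per quarter, explanation fulfillment above 95%) into a simple pass/fail report; the `QuarterStats` fields and sample numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class QuarterStats:
    interactions: int
    safety_breaches: int
    model_rollbacks: int
    explanation_requests: int
    explanations_answered: int


def kpi_report(s: QuarterStats) -> Dict[str, dict]:
    """Compare one quarter's numbers with the example targets from this post."""
    breach_rate = s.safety_breaches / s.interactions if s.interactions else 0.0
    fulfillment = (s.explanations_answered / s.explanation_requests
                   if s.explanation_requests else 1.0)
    return {
        "safety_breach_rate": {"value": breach_rate, "ok": breach_rate < 0.001},      # <0.1%
        "model_rollbacks": {"value": s.model_rollbacks, "ok": s.model_rollbacks < 2},  # <2 per quarter
        "explanation_fulfillment": {"value": fulfillment, "ok": fulfillment > 0.95},   # >95%
    }


good_quarter = kpi_report(QuarterStats(50_000, 30, 1, 200, 196))
bad_quarter = kpi_report(QuarterStats(50_000, 80, 2, 200, 180))
for name, result in bad_quarter.items():
    print(f"{name:<24} {result['value']:.4f}  {'OK' if result['ok'] else 'MISSED'}")
```

Keeping the report this small is deliberate: a handful of metrics that someone owns beats a dashboard nobody reads.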

Instead of tracking everything and risking analysis paralysis, focus on a small set of metrics that truly matter to your business outcomes.

Let’s explore some existing frameworks:

While no single global framework exists yet, several significant AI safety initiatives, guidelines, and regulatory frameworks are emerging across governments, industry, and research organizations.

Not all AI companies have fully developed, proprietary safety frameworks for their LLMs—but most serious players implement some form of safety protocols.

You can go through some of these:

I strongly believe that AI safety and responsible AI should be treated not as a cost center but as a revenue-driving function.

Yoshua Bengio, a pioneer of artificial neural networks and deep learning, is advocating for building a Scientist AI whose primary job would be to assess the likelihood that another AI's action could cause harm, and to intervene and block it if the risk exceeds an acceptable threshold (TED Talk). AI companies could integrate a Scientist AI layer into their models to prevent them from taking potentially dangerous actions.

Until we have a Scientist AI, let’s act as our own, building internal safeguards and oversight within the company.

Let me end with a quote from Nick Bostrom, author of Superintelligence: Paths, Dangers, Strategies and a philosopher known for his work on existential risk:

As a final note, let’s not be the ants that Stephen Hawking refers to. Embed safety guardrails from the get-go. Scale responsibly. To responsible AI 🎉 I hope I have scared you enough :p

I would love to connect with folks who are setting up AI guardrails in their orgs. We’ve grown to a community of 3,500+ engaged readers, including CXOs and top management. If you’re looking to promote your business or explore brand sponsorship opportunities, feel free to DM me. Our upcoming posts will focus on sustainability. If this aligns with your brand or business values, it could be a great opportunity for collaboration or sponsorship.

Keep learning, and have a great weekend 🎉

Thanks,

Neetu

© 2025 Neetu Barmecha. All rights reserved.

This content is the intellectual property of the author. Do not reproduce without permission.